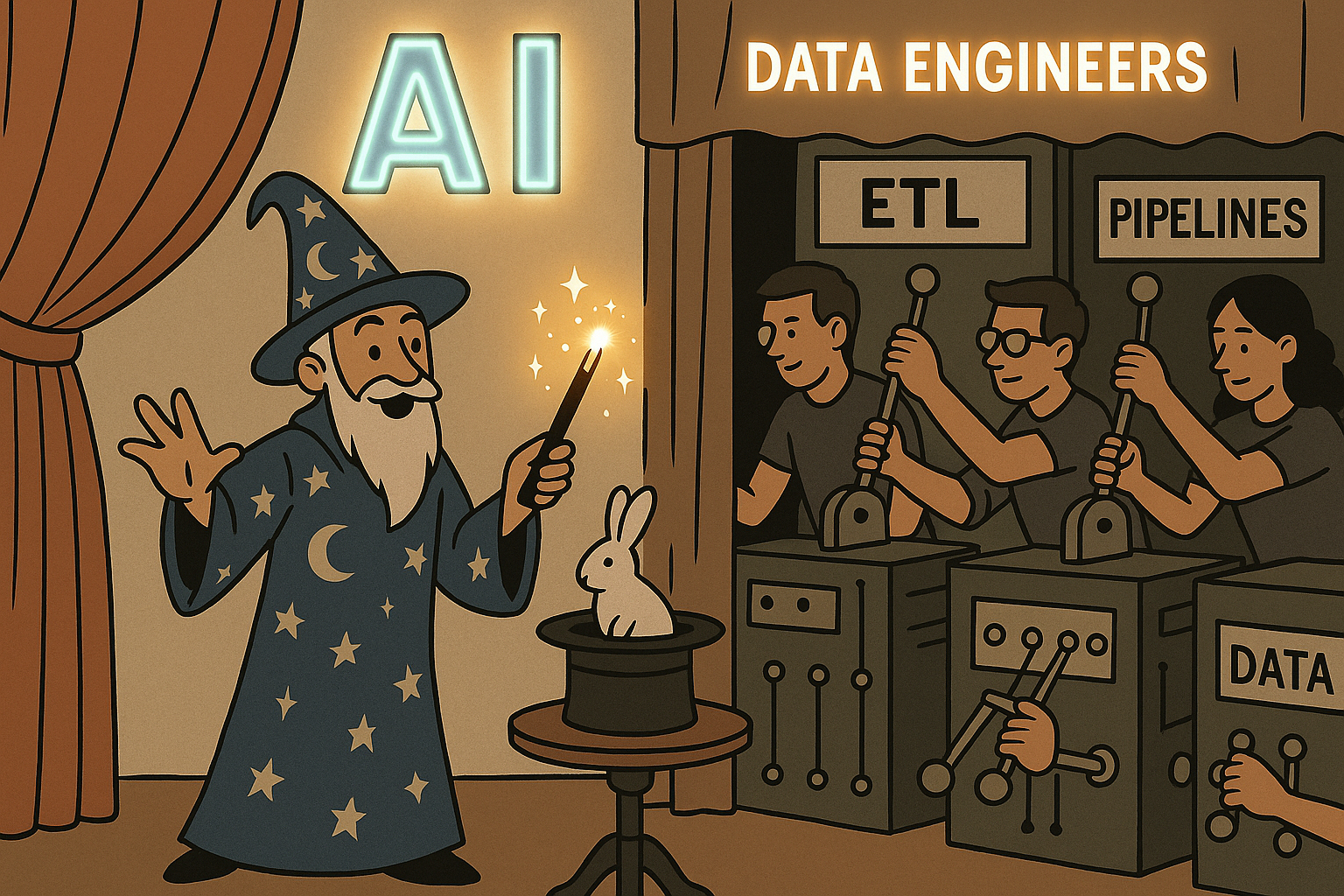

The AI revolution isn’t really about AI—it’s about data engineering wearing a shiny new suit.

The Great Rebranding

Walk into any tech conference today and you’ll hear buzzwords flying: GenAI, Agentic AI, AI Engineering, LLMOps. The hype is real, the funding is flowing, and everyone’s racing to slap “AI” on their job titles. But strip away the marketing veneer, and you’ll find something familiar underneath: good old-fashioned data engineering.

It’s not that these AI innovations aren’t real or valuable. They absolutely are. But the infrastructure that makes them possible? That’s data engineering, evolved and specialized for a new era.

The Hidden Truth

Here’s the uncomfortable truth about AI applications: by a common rule of thumb, roughly 80% of the work is data engineering. The other 20%? That’s where the AI magic happens. But without that 80%, the magic doesn’t work at all.

GenAI Applications: Data Pipelines in Disguise

Take Retrieval-Augmented Generation (RAG), the backbone of many modern AI applications:

- Document ingestion: ETL pipelines that parse, clean, and normalize text from various sources

- Chunking strategies: Data transformation logic that splits documents into semantically meaningful pieces

- Vector embeddings: Feature engineering for high-dimensional spaces

- Vector storage: Specialized databases that are still, fundamentally, databases

- Retrieval systems: Query engines optimized for similarity search
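
To make the "data pipeline in disguise" point concrete, here is a minimal sketch of the ingestion and chunking steps in Python. It assumes plain-text input and fixed word windows with overlap; `normalize` and `chunk_words` are hypothetical helpers, and production systems often chunk by tokens, sentences, or document structure instead.

```python
import re
from typing import List


def normalize(text: str) -> str:
    """Ingestion/cleaning: collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Transformation: split text into windows of `chunk_size` words.

    Consecutive chunks share `overlap` words, so context near a boundary
    appears in both neighbouring chunks.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = normalize(text).split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

Calling `chunk_words(text, chunk_size=5, overlap=2)` on a 12-word string yields four chunks, each sharing two words with its neighbour. The overlap is a design choice that trades extra storage for less context lost at chunk boundaries.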

Sound familiar? It should. This is a data pipeline with vector databases instead of relational ones.
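
The "still, fundamentally, databases" point can be illustrated with a toy in-memory store: rows keyed by ID, an upsert, and a query that ranks rows by cosine similarity. This is a brute-force sketch with hypothetical names (`InMemoryVectorStore`, `upsert`, `query`), not the API of any product named in this post; real vector databases typically use approximate nearest-neighbour indexes to avoid scanning every row.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """A toy 'vector database': a table of id -> vector plus a similarity query."""

    def __init__(self) -> None:
        self._rows: Dict[str, List[float]] = {}

    def upsert(self, doc_id: str, vector: Sequence[float]) -> None:
        # Insert or overwrite, like an upsert in any key-value table.
        self._rows[doc_id] = list(vector)

    def query(self, vector: Sequence[float], k: int = 3) -> List[Tuple[str, float]]:
        # Brute-force scan: score every row, then keep the k most similar.
        scored = [(doc_id, cosine(vector, row)) for doc_id, row in self._rows.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]
```

With rows `a=[1, 0]`, `b=[0, 1]`, and `c=[1, 1]`, querying `[1, 0.1]` with `k=2` returns `a` first and `c` second.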

Agentic AI: Orchestration at Scale

Agentic AI systems that can use tools and make decisions are impressive, but they’re built on:

- Tool integration: API management and service orchestration

- State management: Distributed systems keeping track of conversation context and agent memory

- Workflow orchestration: Pipeline systems that route requests and manage dependencies

- Monitoring and observability: Tracking agent performance, tool usage, and system health
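
A minimal sketch of the plumbing around an agent: a tool registry (tool integration), a per-session history (state management), and per-tool call and error counters (monitoring). The decision-making model is deliberately left out, and `ToolRegistry` and `AgentSession` are hypothetical names rather than any framework's API.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


class ToolRegistry:
    """Tool integration: map tool names to callables and count usage."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self.usage: Counter = Counter()   # monitoring: calls per tool
        self.errors: Counter = Counter()  # monitoring: failures per tool

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        self.usage[name] += 1
        try:
            return self._tools[name](**kwargs)
        except Exception:
            self.errors[name] += 1
            raise


@dataclass
class AgentSession:
    """State management: the history of tool calls for one conversation."""

    session_id: str
    history: List[Dict[str, Any]] = field(default_factory=list)

    def run_tool(self, registry: ToolRegistry, name: str, **kwargs: Any) -> Any:
        # In a real agent, a model would choose `name` and `kwargs`;
        # here the caller passes them in directly.
        result = registry.call(name, **kwargs)
        self.history.append({"tool": name, "args": kwargs, "result": result})
        return result
```

Here the state lives in process memory; a distributed deployment would typically persist `history` in an external store so any worker can resume the conversation.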

This is distributed systems engineering and workflow orchestration—core data engineering competencies.
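
At its core, workflow orchestration means running steps in dependency order. A minimal single-process sketch using the standard library's `graphlib.TopologicalSorter` (Python 3.9+); `run_workflow` is a hypothetical helper, and real orchestrators add scheduling, retries, and parallel or distributed execution on top of this idea.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, Iterable


def run_workflow(
    steps: Dict[str, Callable[[Dict[str, Any]], Any]],
    deps: Dict[str, Iterable[str]],
) -> Dict[str, Any]:
    """Run every step after its dependencies; each step receives prior results."""
    # Graph of step -> set of steps it depends on (steps with no entry have none).
    graph = {name: set(deps.get(name, ())) for name in steps}
    missing = {d for ds in graph.values() for d in ds} - set(steps)
    if missing:
        raise ValueError(f"unknown dependencies: {sorted(missing)}")
    results: Dict[str, Any] = {}
    # static_order() yields a valid execution order, or raises CycleError
    # (a ValueError subclass) if the dependencies form a cycle.
    for name in TopologicalSorter(graph).static_order():
        results[name] = steps[name](results)
    return results
```

For example, three steps named `extract`, `transform`, and `load`, where `transform` depends on `extract` and `load` depends on `transform`, always run in that order regardless of how the `steps` dictionary is arranged.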

The New Data Engineering Stack

The AI era hasn’t made data engineering obsolete; it has expanded its toolkit:

- Traditional Data Engineering

  - SQL databases

  - ETL pipelines

  - Data warehouses

  - Batch processing

  - Monitoring dashboards

- AI-Era Data Engineering

  - Vector databases (Pinecone, Weaviate, Chroma)

  - Embedding pipelines

  - Real-time inference serving

  - Stream processing for live data

  - Model performance monitoring

  - Multi-modal data handling

The principles remain the same: move data reliably, transform it correctly, serve it fast, and monitor everything.
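
Two of those principles, "move data reliably" and "monitor everything", can be sketched as a small wrapper that retries a pipeline step with exponential backoff and records attempts, failures, and latency. `StepMetrics` and `run_with_retries` are hypothetical names; orchestrators and client libraries usually ship their own retry policies, which are worth preferring in production.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class StepMetrics:
    """Simple counters that a monitoring dashboard could be fed from."""

    attempts: int = 0
    failures: int = 0
    latencies_s: List[float] = field(default_factory=list)


def run_with_retries(
    step: Callable[[], Any],
    metrics: StepMetrics,
    max_attempts: int = 3,
    base_delay: float = 0.1,
) -> Any:
    """Run `step`, retrying failures with exponential backoff.

    Records every attempt and failure, plus the latency of the successful call.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        metrics.attempts += 1
        start = time.perf_counter()
        try:
            result = step()
        except Exception:  # illustration only: real code should retry transient errors only
            metrics.failures += 1
            if attempt == max_attempts:
                raise
            # Backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
            time.sleep(base_delay * 2 ** (attempt - 1))
        else:
            metrics.latencies_s.append(time.perf_counter() - start)
            return result
```

A step that fails twice and then succeeds returns its result on the third attempt and leaves `attempts=3`, `failures=2`, and one latency sample in the metrics.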

Why This Matters for Organizations

Organizations rushing to implement AI often underestimate the data engineering requirements. They see the demos, get excited about the possibilities, and then hit the wall of reality:

- Data quality issues: LLMs amplify garbage-in, garbage-out problems

- Scalability challenges: Vector similarity search at scale is hard

- Latency requirements: Users expect sub-second response times

- Cost management: LLM inference and vector storage aren’t cheap

- Reliability needs: AI systems must be production-ready, not just demo-ready
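
Because a RAG system can only retrieve what was indexed, a cheap quality gate before embedding is one way to address the data quality point above. A minimal sketch assuming three illustrative rules (drop empty text, drop very short text, drop exact duplicates after whitespace and case normalization); `quality_gate` and the `min_words` threshold are hypothetical, and real pipelines may add near-duplicate detection, language checks, or PII filtering.

```python
import hashlib
import re
from typing import Dict, List, Set, Tuple


def quality_gate(docs: List[str], min_words: int = 5) -> Tuple[List[str], Dict[str, int]]:
    """Filter documents before embedding; return kept docs and rejection counts."""
    kept: List[str] = []
    rejected = {"empty": 0, "too_short": 0, "duplicate": 0}
    seen: Set[str] = set()
    for doc in docs:
        text = re.sub(r"\s+", " ", doc).strip()
        if not text:
            rejected["empty"] += 1
            continue
        if len(text.split()) < min_words:
            rejected["too_short"] += 1
            continue
        # Exact-duplicate check on a case-insensitive fingerprint of the text.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            rejected["duplicate"] += 1
            continue
        seen.add(digest)
        kept.append(text)
    return kept, rejected
```

Returning the rejection counts alongside the kept documents means the gate doubles as a data quality metric that can be tracked over time.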

Companies that succeed in AI aren’t necessarily those with the best ML scientists (though they help). They’re the ones with solid data engineering foundations.

The Skills That Actually Matter

If you’re building AI systems, you need the core data engineering skills: pipeline orchestration, real-time processing, database expertise, API design, and monitoring. The AI-specific additions (vector operations, model serving, prompt engineering) extend these core skills rather than replace them.

The Road Ahead

As AI systems become more sophisticated, the data engineering requirements will only grow. Multi-agent systems need complex orchestration. Multimodal AI requires diverse data pipelines. Real-time personalization demands low-latency serving. The foundation remains the same: reliable data movement, transformation, and serving.

Conclusion: Embrace the Foundation

The AI revolution is real, but it’s not magic. It’s engineering—specifically, data engineering adapted for a new generation of applications. The companies and engineers who recognize this will build more reliable, scalable, and successful AI systems.

So the next time someone asks you about AI engineering, remember it’s data engineering with better marketing. And that’s not a bad thing—it’s exactly what we need to build the AI future we’re all excited about.

The future belongs to those who can move data fast, transform it reliably, and serve it at scale. In other words, the future belongs to data engineers.

Author


As the leader of Data Science Initiatives at Brillersys, he brings over 12 years of expertise in designing and deploying data-intensive applications and machine learning models. He focuses on solving complex business challenges and enhancing data-driven decision-making across various industries and domains.

Lead Data Scientist | Machine Learning Engineer
